Better Prompts for Google Gemini: 5 Techniques That Actually Work

I recently came across a post on X from Google AI developers that detailed five specific prompting techniques for getting better results from Google Gemini thinking models such as Gemini 2.5 Flash.

These aren’t vague tips; they are practical methods that can noticeably improve your interactions with these models.

I’m sharing them here for anyone working with Gemini who wants more refined outputs.

Why Prompt Engineering Matters for Gemini

Google’s Gemini models (especially the “thinking” variants like 2.5 Flash) respond differently based on how you structure your requests. A slight change in wording or format can mean the difference between a mediocre answer and an exceptional one.

The right prompt saves time and gets better results. The wrong one leads to frustration and multiple attempts to get what you need.

The Five Techniques Highlighted by Google AI Developers

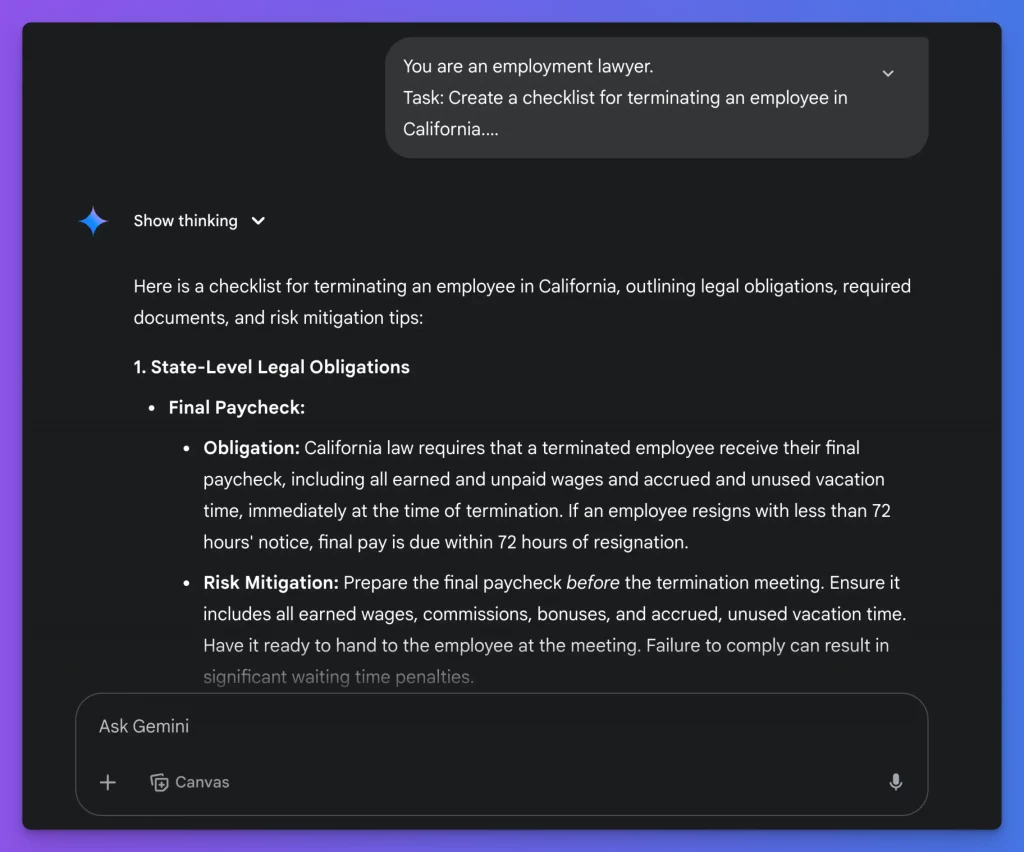

1. Give Step-by-Step Instructions

What it is: Breaking down complex tasks into clear, sequential steps.

Why it works: Spelling out the steps helps keep Gemini from skipping important reasoning steps. It guides the model to work through the problem methodically instead of jumping straight to a conclusion.

How to do it: List specific steps you want the model to follow in numbered order. Be clear about what you want at each stage.

Example:

You are an employment lawyer.

Task: Create a checklist for terminating an employee in California.

Steps:

1. List all state-level legal obligations.

2. List federal obligations.

3. Outline required documents.

4. Give a short risk-mitigation tip for each step.

Return the answer as numbered sections with bullet points inside each section.

If Gemini’s first attempt misses the mark, adding more detailed steps in your follow-up prompt can help redirect it.
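If you assemble prompts in code rather than typing them by hand, the numbered steps can be generated from a list. The Python sketch below rebuilds the employment-lawyer prompt above. `build_stepwise_prompt` is a hypothetical helper, not part of any Gemini SDK, and it only produces the prompt string, which you would then pass to whichever Gemini client you use.

```python
def build_stepwise_prompt(role: str, task: str, steps: list[str], output_format: str) -> str:
    """Assemble a role + task + numbered-steps prompt as one string (hypothetical helper)."""
    lines = [f"You are {role}.", "", f"Task: {task}", "", "Steps:"]
    # Number the steps explicitly so the model (and your follow-ups) can refer to each one.
    for i, step in enumerate(steps, start=1):
        lines.append(f"{i}. {step}")
    lines += ["", output_format]
    return "\n".join(lines)


prompt = build_stepwise_prompt(
    role="an employment lawyer",
    task="Create a checklist for terminating an employee in California.",
    steps=[
        "List all state-level legal obligations.",
        "List federal obligations.",
        "Outline required documents.",
        "Give a short risk-mitigation tip for each step.",
    ],
    output_format="Return the answer as numbered sections with bullet points inside each section.",
)
print(prompt)
```

Keeping the steps in a list also makes the follow-up advice easier to act on: you can add a more detailed step and regenerate the prompt instead of rewriting it.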

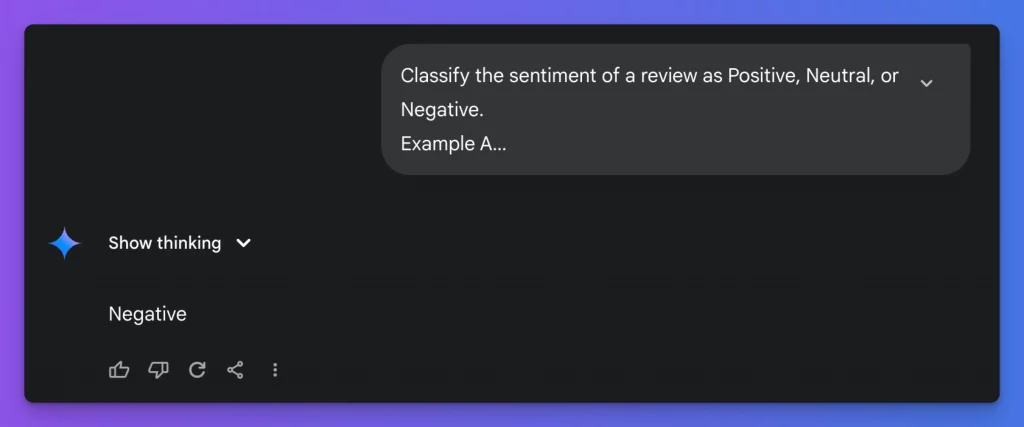

2. Use Multishot Prompting

What it is: Showing Gemini several examples of inputs and desired outputs before asking your actual question.

Why it works: The examples let Gemini infer the pattern without you having to spell out every rule in words. This is particularly useful when you want the model to follow specific formatting or classification rules.

How to do it: Provide 2-4 clear examples of the input-output pairs you want, then ask your real question.

Example:

Classify the sentiment of a review as Positive, Neutral, or Negative.

Example A

Review: “Shipping was lightning-fast and the shoes fit perfectly.”

Sentiment: Positive

Example B

Review: “The packaging was fine, but the product felt average.”

Sentiment: Neutral

Example C

Review: “The screen cracked after one day and support ignored me.”

Sentiment: Negative

Now classify: “It works, but the battery drains in four hours.”

Respond with only the sentiment label.

The developers note that this works much better than asking for sentiment analysis with no examples.
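Few-shot prompts are easy to assemble from a list of labelled pairs, and because the prompt asks for only the label, the reply can be checked mechanically. A minimal Python sketch, assuming plain-string prompts; `build_multishot_prompt` and `parse_label` are hypothetical helpers written for this post, not part of any Gemini library.

```python
LABELS = ("Positive", "Neutral", "Negative")

# Input/output pairs taken from the example prompt above.
EXAMPLES = [
    ("Shipping was lightning-fast and the shoes fit perfectly.", "Positive"),
    ("The packaging was fine, but the product felt average.", "Neutral"),
    ("The screen cracked after one day and support ignored me.", "Negative"),
]


def build_multishot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Place the labelled examples (A, B, C, ...) ahead of the real question."""
    parts = ["Classify the sentiment of a review as Positive, Neutral, or Negative.", ""]
    for i, (review, sentiment) in enumerate(examples):
        parts += [f"Example {chr(ord('A') + i)}", f'Review: "{review}"', f"Sentiment: {sentiment}", ""]
    parts += [f'Now classify: "{query}"', "", "Respond with only the sentiment label."]
    return "\n".join(parts)


def parse_label(reply: str) -> str:
    """Normalize a one-word reply and reject anything outside the label set."""
    cleaned = reply.strip().strip(".").capitalize()
    if cleaned not in LABELS:
        raise ValueError(f"Unexpected label: {reply!r}")
    return cleaned


prompt = build_multishot_prompt(EXAMPLES, "It works, but the battery drains in four hours.")
print(prompt)
print(parse_label(" negative.\n"))  # Negative
```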

3. Define Output Format and Behavior

What it is: Setting clear expectations about how you want Gemini to respond and what role it should play.

Why it works: This creates boundaries for the response, making it more predictable and useful. It also helps Gemini adopt the right tone and expertise level.

How to do it: Specify a system role (for example, expert or teacher) and the exact format you need (a table, JSON, or bullet points).

Example:

System instructions:

• You are a friendly nutrition coach.

• Answer in valid JSON with keys: “meal_plan”, “calories”, “notes”.

• Keep total daily calories at 2100 ± 50.

User: Create a one-day vegetarian meal plan suitable for a runner training for a half-marathon.

This approach gives you well-structured data that’s easier to use than an unformatted response.
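Asking for JSON pays off only if you check what comes back. Here is a minimal Python validator for the nutrition-coach reply, using only the standard library. It assumes that `calories` is returned as a single number for the whole day; the prompt does not pin that down, so adjust it if Gemini reports calories per meal. The sample reply is invented for illustration.

```python
import json

REQUIRED_KEYS = {"meal_plan", "calories", "notes"}
TARGET_KCAL, TOLERANCE_KCAL = 2100, 50


def validate_reply(raw: str) -> dict:
    """Parse the model's reply and enforce the constraints from the system instructions."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object at the top level")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"Missing keys: {sorted(missing)}")
    calories = data["calories"]
    # Assumption: "calories" holds the daily total in kcal as a plain number.
    if not isinstance(calories, (int, float)) or abs(calories - TARGET_KCAL) > TOLERANCE_KCAL:
        raise ValueError(f"Calories outside {TARGET_KCAL} ± {TOLERANCE_KCAL}: {calories!r}")
    return data


# A made-up reply, used only to exercise the validator.
sample = '{"meal_plan": {"breakfast": "oatmeal with berries"}, "calories": 2120, "notes": "Hydrate before long runs."}'
plan = validate_reply(sample)
print(plan["calories"])  # 2120
```

The Gemini API can also be asked to return JSON directly (for example by setting `response_mime_type` to `application/json` in the generation config; check the current API documentation), but a local check like this still catches missing keys and out-of-range totals.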

4. Request Debugging and Self-Checking

What it is: Asking Gemini to show its work and verify its own answers.

Why it works: According to the Google AI developers, this slows the model down and reduces errors. It also lets you spot mistakes in its reasoning and correct them precisely.

How to do it: Ask the model to solve problems step by step and verify each part of its answer.

Example:

Solve the puzzle step by step.

Question: “A cyclist travels 30 km at 20 km/h and then 20 km at 10 km/h. What is the average speed for the whole trip?”

After solving, run a brief self-check: state any assumption you made and confirm each numeric step. End with “Final answer: X km/h”.

The developers suggest that when you see a mistake, you can send a follow-up with specific corrections like “Re-check your math in step 3” rather than starting over.
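To judge Gemini’s self-check, it helps to know the expected answer. Average speed is total distance divided by total time, not the mean of the two speeds, which is exactly the kind of slip a step-by-step check should expose. A short Python calculation using exact fractions:

```python
from fractions import Fraction

legs = [(30, 20), (20, 10)]  # (distance in km, speed in km/h) for each leg

total_distance = sum(d for d, _ in legs)                    # 30 + 20 = 50 km
total_time = sum(Fraction(d, v) for d, v in legs)           # 3/2 h + 2 h = 7/2 h
average_speed = total_distance / total_time                 # 50 / (7/2) = 100/7 km/h

naive_mean = Fraction(sum(v for _, v in legs), len(legs))   # (20 + 10) / 2: the common trap

print(f"Final answer: {float(average_speed):.1f} km/h")     # Final answer: 14.3 km/h
print(f"Naive mean of speeds: {float(naive_mean):.1f} km/h")  # Naive mean of speeds: 15.0 km/h
```

A correct response should therefore end with "Final answer: 14.3 km/h" (exactly 100/7 km/h). If Gemini answers 15 km/h, it has averaged the two speeds, and a targeted follow-up such as "Re-check how you computed the total time" is more useful than starting over.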

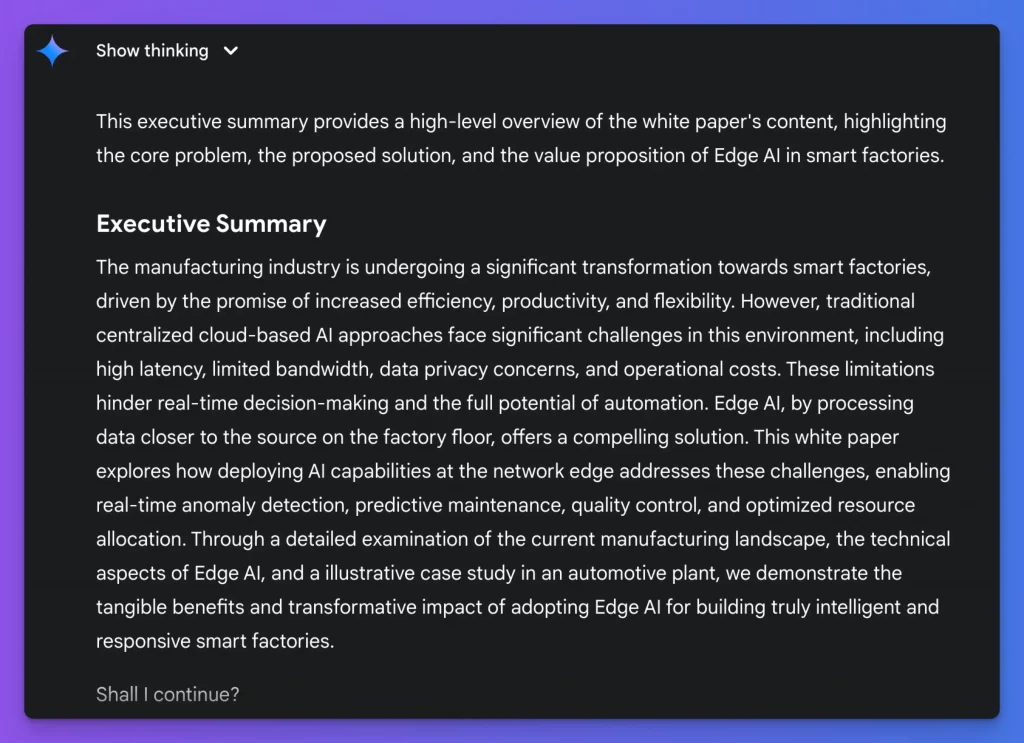

5. Manage Long Outputs Effectively

What it is: Techniques to control lengthy responses for better quality and efficiency.

Why it works: Long responses use up tokens quickly and can lose focus. Setting specific constraints helps keep answers useful and on-target.

How to do it: Specify section lengths, add checkpoints, and request specific structures.

Example:

Write a 1500-word white-paper draft on “Edge AI in Smart Factories”.

Structure:

• Executive summary (120 words)

• Current challenges (300 words)

• Edge AI solutions (400 words)

• Case study: automotive plant (400 words)

• Key takeaways (280 words)

Before each section, add a one-sentence note explaining why the point is included.

Stop after the executive summary and ask: “Shall I continue?”

This approach gives you control points to review the content before proceeding further.
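Word budgets are easy to break when you edit a prompt, so it can be worth confirming that the sections still add up to the stated total (here 120 + 300 + 400 + 400 + 280 = 1500) and flagging sections that come back too long. A rough Python sketch; counting words by splitting on whitespace and allowing 10% slack are my own assumptions, not part of the original advice.

```python
SECTIONS = [
    ("Executive summary", 120),
    ("Current challenges", 300),
    ("Edge AI solutions", 400),
    ("Case study: automotive plant", 400),
    ("Key takeaways", 280),
]
TOTAL_WORDS = 1500


def check_budget(sections: list[tuple[str, int]], total: int) -> int:
    """Fail loudly if the per-section budgets no longer sum to the requested length."""
    allocated = sum(words for _, words in sections)
    if allocated != total:
        raise ValueError(f"Sections add up to {allocated} words, not {total}")
    return allocated


def over_budget(text: str, budget: int, slack: float = 0.1) -> bool:
    """Approximate check: True if a drafted section runs more than `slack` over its budget."""
    return len(text.split()) > budget * (1 + slack)


print(check_budget(SECTIONS, TOTAL_WORDS))  # 1500
```

Running `over_budget` on each section as it arrives fits naturally with the "Shall I continue?" checkpoint: you review the length and content before asking for the next part.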

Common Pitfalls to Watch For

Even with these techniques, there are some issues to be aware of:

- Being too vague: “Write better” doesn’t help. “Use active voice and keep sentences under 15 words” does.

- Contradictory instructions: Asking for “comprehensive but brief” creates confusion. Be specific about priorities.

- Forgetting to specify format: Without format guidance, you might get a wall of text when you needed bullet points.

- Not providing context: The more relevant background you give, the better the results tend to be.

A Useful Template Based on These Techniques

Here’s a template that incorporates multiple techniques:

Context: [Add relevant background]

Role: You are a [specific expertise]

Task: [Clear description]

Steps:

1. [Step one]

2. [Step two]

3. […]

Format: [Exact output structure]

Examples: [1-2 input-output pairs if needed]
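The template above can also live in code, so every prompt you send carries the same sections in the same order. A minimal Python sketch; `PromptSpec` is a hypothetical class, and the filled-in values reuse the review-classification example from earlier purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container mirroring the Context/Role/Task/Steps/Format/Examples template."""
    context: str
    role: str
    task: str
    steps: list[str]
    output_format: str
    examples: list[tuple[str, str]] = field(default_factory=list)

    def render(self) -> str:
        lines = [f"Context: {self.context}", "", f"Role: You are {self.role}", "",
                 f"Task: {self.task}", "", "Steps:"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        lines += ["", f"Format: {self.output_format}"]
        if self.examples:  # the template marks examples as optional
            lines += ["", "Examples:"]
            lines += [f"Input: {inp}\nOutput: {out}" for inp, out in self.examples]
        return "\n".join(lines)


spec = PromptSpec(
    context="An online shoe store wants to sort incoming customer reviews.",
    role="a customer-feedback analyst",
    task="Classify the sentiment of each review.",
    steps=["Read the review.", "Decide whether it is Positive, Neutral, or Negative."],
    output_format="Respond with only the sentiment label.",
    examples=[("Shipping was lightning-fast and the shoes fit perfectly.", "Positive")],
)
prompt = spec.render()
print(prompt)
```

Leaving `examples` empty omits that section, matching the template’s "if needed".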

This structured approach should help get better results from Gemini thinking models.

These five techniques shared by Google AI developers offer a more structured way to interact with Google Gemini. They all come down to being specific and clear in your requests, which seems to be key to getting quality responses.

The Google team’s insights show how much the prompt itself shapes the result: the clearer you are about what you want and how you want it, the better Gemini performs.

If you’re working with Gemini models, these techniques are worth trying to see how they improve your results.