How to Identify AI Photos Using Gemini

I’ve noticed something lately that honestly caught me off guard. The AI-generated images out there are becoming incredibly realistic. Google just released their Nano Banana Pro image model, and the results are almost impossible to distinguish from actual photographs.

You’ll look at a photo and have no idea whether a human took it or an algorithm created it. It’s a real problem, and it’s only getting worse.

But here’s the good news: Google has at least a partial solution. They’ve built verification tools right into Gemini that can tell you whether an image was created using Google’s AI. If you’re tired of wondering whether what you’re seeing is real or fake, this is worth knowing about.

I should mention upfront that this method only works for images generated by Google’s AI products. If someone used a different AI tool to create an image, Gemini won’t be able to verify it using this method. But for Google-generated content, this is actually pretty useful.

What’s Really Going On

Google has been thinking about this problem for a while. They know that AI images are becoming mainstream, and they want people to be able to figure out what’s real and what isn’t.

They’ve created something called SynthID, essentially a digital watermarking system that quietly embeds signals into AI-generated images. You can’t see these signals with your eyes, but they’re there.

Since 2023, Google has used SynthID to watermark over 20 billion pieces of AI-generated content. That’s a lot of marked content floating around. The company has also been testing a SynthID Detector with journalists and media professionals to make sure it actually works in real situations.

So when you’re sitting there trying to figure out if an image is real, Google’s tools are designed to help answer that question.

How to Actually Check an Image

This is the useful part, and the process is straightforward.

First, find an image you’re skeptical about. Maybe you found it on social media, someone sent it to you, or you stumbled upon it online. You’re not sure whether it’s real or AI-generated.

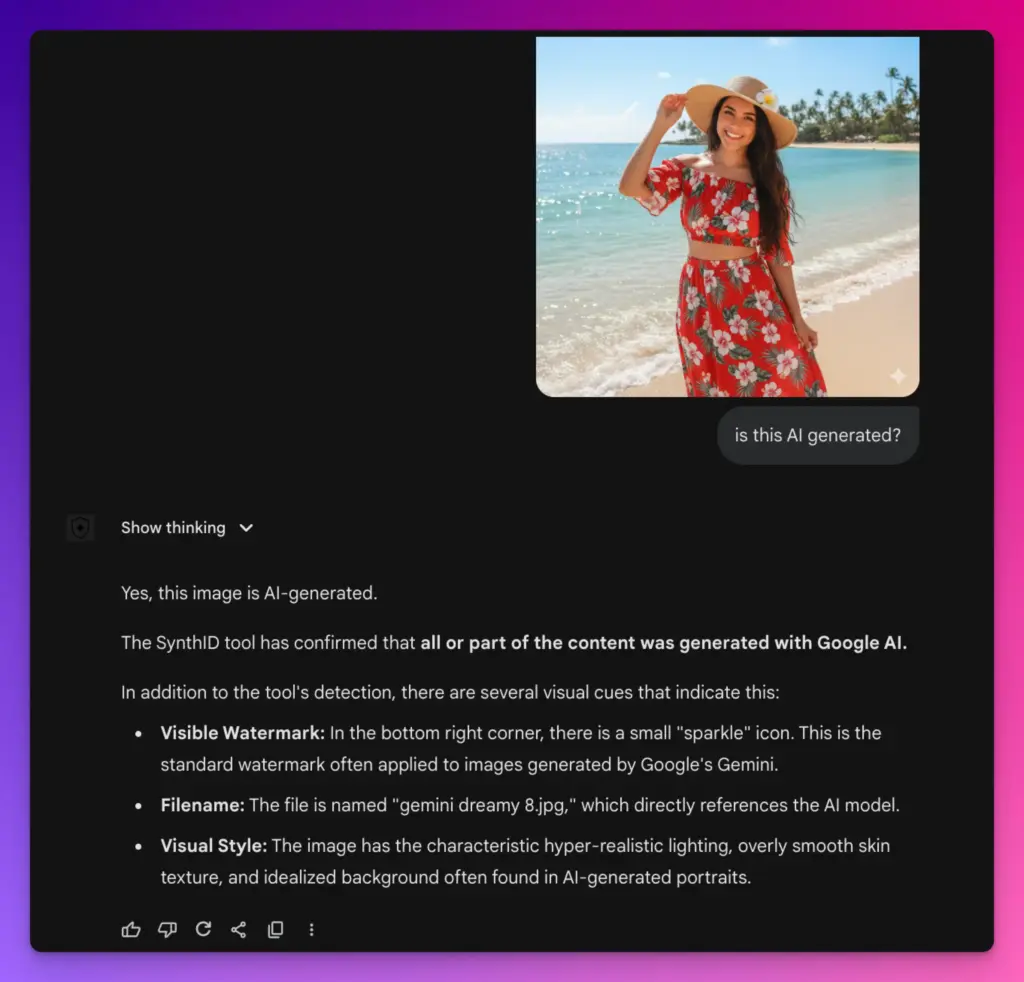

Open the Gemini app on your phone or computer. Upload the image you want to check. Then ask Gemini a simple question like “Was this created with Google AI?” or “Is this AI-generated?”

Gemini will then look for the SynthID watermark in the image. It combines this watermark detection with its own reasoning abilities to give you an answer. You’ll get back a response that tells you whether the image appears to have been created using Google’s AI tools.

That’s it. No complicated steps. No special software. Just upload and ask.

The Limits of This Approach

I want to be honest about something. This only works for images made by Google’s AI. If someone used Midjourney, DALL-E, Stable Diffusion, or any other AI image generator, Gemini won’t be able to verify those images using this method.

The watermark only exists in images created through Google’s tools, such as the Gemini app itself, Vertex AI, or Google Ads. So if you’re trying to verify whether an image came from a competitor’s AI system, this won’t help you.

That said, Google is planning to expand this. They’re working on support for formats beyond images, with video and audio coming next. And they’re collaborating with other companies through the Coalition for Content Provenance and Authenticity (C2PA) to create industry-wide standards for tracking where content comes from.

Why This Matters

We’re living in a time when fake images are becoming indistinguishable from real ones. Social media can spread false information incredibly fast. News outlets accidentally publish AI-generated images. People use AI to create misleading content for all kinds of reasons.

Having a way to verify whether an image came from Google’s AI system is one step toward making the internet a little more transparent. It’s not a complete solution; there’s still plenty of AI-generated content from other sources that you can’t verify this way. But it’s something.

Google isn’t stopping here. They’ve said they’ll expand SynthID verification to work with C2PA content credentials, the broader industry standard for recording where content came from. Eventually, you might be able to check images created by other companies’ AI systems too, not just Google’s.

They’re also integrating these verification tools into other Google products. Google Search will eventually help you verify whether images in search results are AI-generated. YouTube might get these tools, and Google Photos could use them too. The company is treating this as a priority across its entire product lineup.

Images generated by Nano Banana Pro already come with metadata that shows how they were created. This transparency is part of Google’s broader effort to make AI-generated content easier to identify and harder to pass off as real.

The internet is getting messier when it comes to figuring out what’s real. Having tools like this helps at least a little. And as Google expands these capabilities, the verification process should become even more useful for identifying AI-generated content across the board.

It’s not a perfect solution, but it’s a step in the right direction.